Container Orchestration: Definition, Components, Working, Tools & Use Cases

Container orchestration has become the backbone of running modern, containerized applications at scale. This article explains what container orchestration is, the key components of an orchestration system, and how it works in real environments. It distinguishes Docker from container orchestration, outlines the most common use cases, and reviews the best tools and platforms available today. You’ll also see how Kubernetes orchestration works, how Kubernetes security shapes your design, and how cloud and container management platforms support orchestration in practice.

What Is Container Orchestration?

Container orchestration is the process of using an orchestration tool or platform (such as Kubernetes) to automate the scheduling, scaling, deployment, and recovery of containerized applications across a cluster of nodes, based on a declared desired state. It ensures container workloads are placed on suitable machines, kept healthy, and exposed with built-in load balancing and high availability, so containers built with tools like Docker can run reliably at scale in modern DevOps and cloud environments.

What Are the Key Components of a Container Orchestration System?

A container orchestration system is made up of several core building blocks that work together to deploy and manage workloads reliably at scale.

The following points focus on key components of container orchestration and how they fit into a typical container orchestration architecture.

- Control plane or orchestration platform – The central brain of the container orchestration platform that stores cluster state, schedules workloads, and exposes an API; in Kubernetes, including managed offerings such as Amazon EKS or Google Kubernetes Engine, this includes the API server, scheduler, and controllers.

- Worker nodes – The machines (physical, cloud, or virtual machines) that run containers (lightweight packages of an application and its dependencies), pull container images, and execute each container deployment under the control plane’s instructions on top of a shared operating system.

- Cluster state and configuration store – A consistent data store that tracks desired versus current state for workloads, networking, and policies so the system can reconcile and heal automatically.

- Networking and service discovery layer – Components that route traffic between containers and expose stable endpoints; for example, a Service in Kubernetes or the built-in service discovery in Docker Swarm.

- Storage, secrets, and configuration management – Integrations that attach persistent storage, environment configuration, and secrets to workloads in a way that remains portable and aligned with containerization practices, where applications and their dependencies are packaged into isolated, repeatable units.

- Observability, policy, and access control – Logging, metrics, tracing, and policy engines that secure and govern how applications are deployed and managed across the platform.

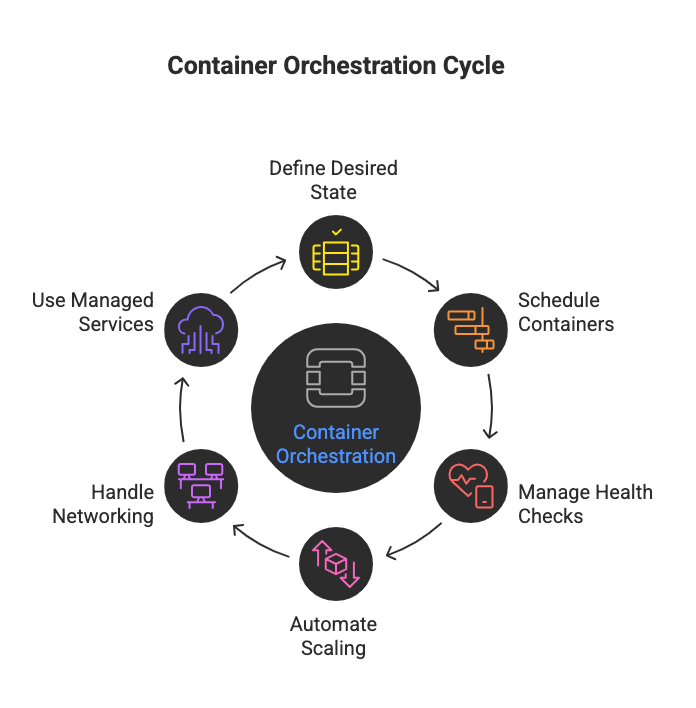

How Does Container Orchestration Work?

Container orchestration works by using a container orchestrator (most often Kubernetes) to constantly align a declared desired state for your apps with what is actually running across your container infrastructure. A simple container orchestration example is a web front end and database running in separate containers across different nodes while the platform keeps the required replicas healthy and load balanced across the cluster.

The following points focus on how container orchestration works in practice.

- You define the desired state in configuration files, describing which Docker containers or container-based services should run, how many replicas each needs, and which container images (versioned templates that package the application code and its dependencies) to use across the Kubernetes cluster.

- The orchestration engine (for example, Kubernetes, Docker Swarm, or Apache Mesos) schedules containers onto nodes, pulls images from a container registry (a centralized repository for storing and distributing versioned container images), and starts each container service.

- The orchestration tool manages health checks and restarts; if a container fails, it launches a new container so the target replica count stays met across the cluster.

- Container orchestration automates core container management tasks such as scaling, so organizations can scale container workloads horizontally instead of tuning each container manually.

- Networking and exposure are handled by the platform; Kubernetes, for example, can expose a set of pods through a Service that routes traffic from the internet to the right pods.

- On a cloud platform such as Google Cloud Platform, Google Kubernetes Engine provides managed Kubernetes, so you can use Kubernetes without operating the control plane yourself. This is a common pattern for container orchestration in cloud computing: the provider runs the control plane and you run the workloads.
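
The desired-state idea in the steps above can be sketched in a few lines of Python. This is a toy, in-memory model rather than how any real orchestrator is implemented: the `Cluster` class, the `reconcile` function, and the service names are all hypothetical, and "starting" a container here only records an ID.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Cluster:
    """Toy in-memory cluster: maps a service name to its running replica IDs."""
    running: dict[str, list[str]] = field(default_factory=dict)
    _ids: count = field(default_factory=count, repr=False)

    def start(self, service: str) -> str:
        # Pretend to start a container and record its ID.
        replica_id = f"{service}-{next(self._ids)}"
        self.running.setdefault(service, []).append(replica_id)
        return replica_id

    def stop(self, service: str) -> str:
        # Pretend to stop the most recently started replica.
        return self.running[service].pop()


def reconcile(desired: dict[str, int], cluster: Cluster) -> list[str]:
    """Compare desired replica counts with what is running and correct drift."""
    actions = []
    for service, want in desired.items():
        have = len(cluster.running.get(service, []))
        for _ in range(want - have):   # too few replicas: start more
            actions.append(f"start {cluster.start(service)}")
        for _ in range(have - want):   # too many replicas: stop extras
            actions.append(f"stop {cluster.stop(service)}")
    return actions


desired_state = {"web": 3, "db": 1}
cluster = Cluster()
first_pass = reconcile(desired_state, cluster)    # starts four replicas
cluster.running["web"].pop(0)                      # simulate a crashed container
second_pass = reconcile(desired_state, cluster)   # starts one replacement
```

Running `reconcile` repeatedly is what makes the model self-healing: a pass that finds no drift returns an empty action list, and a pass after a failure starts only the missing replica.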

Docker vs Container Orchestration: What Is the Difference?

Docker focuses on building and running containers on a single host, while container orchestration coordinates those containers across multiple machines to deliver scalable, highly available applications.

What Are the Benefits of Container Orchestration?

Container orchestration delivers clear, measurable advantages for teams running containerized applications at scale, from reliability to cost control.

The following points focus on the main benefits of container orchestration.

- Improved reliability and self-healing – The orchestrator automatically restarts failed containers, reschedules them on healthy nodes, and keeps the desired replica count, reducing manual firefighting and downtime.

- Automatic scaling and elasticity – Policies and metrics drive horizontal scaling, so the platform can add or remove container replicas during traffic spikes or lulls without manual intervention.

- Consistent deployments across environments – The same specs and manifests are applied across dev, staging, and production, making rollouts, rollbacks, and promotions more predictable and auditable.

- Better resource utilization and cost efficiency – The scheduler packs workloads efficiently onto nodes, so CPU and memory are used more effectively, which can lower infrastructure spend at scale.

- Stronger isolation and security controls – Namespaces, network policies, RBAC, and workload policies give you fine-grained isolation between teams, apps, and environments, improving security posture.

- Faster delivery and DevOps automation – Integrating orchestration with CI/CD enables frequent, automated deployments, blue/green or canary releases, and safer experimentation without changing underlying hosts.

- Portability across clouds and on premises – Containerized workloads plus standardized orchestration APIs make it easier to move or replicate applications across different clusters, clouds, or data centers with minimal rework.
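
To make the automatic scaling benefit concrete, here is a minimal sketch of a proportional scaling rule. It is modeled on the formula documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)) and deliberately ignores tolerances, stabilization windows, and multiple metrics. The function name, the metric values, and the default bounds are assumptions chosen for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to how far the metric is from target."""
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))


# Average CPU utilization (percent) against a 60% target:
spike = desired_replicas(4, current_metric=90.0, target_metric=60.0)    # scale out
lull = desired_replicas(4, current_metric=15.0, target_metric=60.0)     # scale in
capped = desired_replicas(8, current_metric=120.0, target_metric=60.0)  # hits max
```

With these inputs the rule asks for 6 replicas during the spike, 1 during the lull, and stops at the configured maximum of 10 in the last case.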

What Are the Challenges of Container Orchestration?

Container orchestration delivers strong benefits, but it also introduces non-trivial challenges that teams must handle to run it safely and efficiently at scale.

The following points focus on key challenges of container orchestration.

- Platform complexity – Designing, operating, and troubleshooting a Kubernetes or similar platform is non-trivial, especially around control plane health, DNS, networking, and upgrades.

- Steep learning curve – Teams must understand containers, orchestration concepts, YAML, RBAC, networking, and observability before they can use the platform safely and efficiently.

- Networking and security – Managing CNI plugins, NetworkPolicies, ingress, TLS, identities, and secrets across many services is difficult and easy to misconfigure.

- Multi-tenant isolation – Safely sharing clusters across teams, namespaces, and environments while enforcing least privilege, quotas, and governance is hard to get right.

- Resource management and costs – Misconfigured requests/limits, autoscaling, and node sizing can cause noisy-neighbor issues, throttling, or unexpectedly high cloud bills.

- Stateful workloads – Running databases and stateful services on orchestration requires careful storage, backup, and failover design; it’s more complex than stateless apps.

- Observability and debugging – Distributed logs, metrics, traces, and ephemeral pods make it harder to debug issues compared to traditional monolithic servers.

- Upgrade and change risk – Upgrading clusters, runtimes, CNI, and workloads introduces compatibility and regression risk, especially without strong automation and testing.

What Are the Most Common Use Cases of Container Orchestration?

Container orchestration is most commonly used wherever teams need to run many services reliably, not just a few containers, and want the core benefits of container orchestration such as scale, uptime, and consistency across environments.

The following points focus on the most common use cases of container orchestration.

- Running microservices in production – In a microservices architecture, Kubernetes orchestration or similar container orchestration tools help keep services scheduled, healthy, and updated across the container platform.

- Autoscaling cloud native workloads – On a cloud service, container orchestration allows applications to scale out and in automatically based on demand, which is one of the biggest benefits of container orchestration.

- High availability for customer facing apps – Container orchestration can restart failed pods and redistribute traffic, so a critical container keeps running even when nodes fail.

- Consistent environments across dev, staging, and prod – Using a container management tool, teams apply the same specs to all container environments, something that would be hard or impossible without container orchestration at scale.

- Managed orchestration in the cloud – With a managed service for Kubernetes container orchestration, providers run the control plane while engineering teams focus on applications instead of low-level operations.

What Are the Best Container Orchestration Tools and Platforms?

The best container orchestration tools and platforms are those that reliably run many containers using automation, scaling, and self-healing across clouds and data centers. A shortlist usually starts with Kubernetes and managed Kubernetes services, then compares simpler options like Docker Swarm for smaller clusters.

These points cover the best container orchestration tools and platforms.

- Kubernetes – An open-source container orchestration system and, in practice, the most popular choice; it manages pods, services, and deployments so it can automatically reschedule workloads and expose containers to the internet through a Kubernetes Service.

- Docker Swarm – A simpler alternative to Kubernetes that integrates tightly with Docker, making it easier for small teams to simplify container operations while still gaining core automation and clustering.

- Managed Kubernetes services – Offerings such as Azure Kubernetes Service (AKS), Amazon EKS, and Google Kubernetes Engine (GKE) deliver container orchestration as a managed service, so the provider runs the control plane while organizations focus on apps, not infrastructure.

- Enterprise and hybrid platforms – Commercial distributions and platform-as-a-service offerings built on Kubernetes or other popular container orchestration engines provide opinionated stacks for elastic container workloads, advanced policy, and integrated tooling, which makes it easier to standardize operations across multiple environments.

How Does Kubernetes Container Orchestration Work?

Kubernetes container orchestration works by continuously reconciling the desired state you declare for your workloads with the actual state of the cluster, so applications stay scheduled, healthy, and reachable without manual intervention.

Here are the key steps in how Kubernetes container orchestration works.

- You define the desired state in YAML objects such as Pods, ReplicaSets, Deployments, and Services, which describe which containers run, the replica count, and how they are exposed.

- The API server validates this configuration and persists it in etcd, acting as the single entry point and source of truth for the cluster.

- The scheduler assigns Pods to nodes based on resource availability and constraints.

- The kubelet on each node starts and monitors containers via the container runtime, the low-level software that pulls images, executes container processes, and restarts them when needed.

- Controllers manage scaling, rolling updates, and self-healing by recreating Pods if they crash or their nodes go offline.

- Services provide stable virtual IPs and load balancing across healthy Pods, so traffic is sent only to Pods that are ready to serve it.

- In Kubernetes, container orchestration refers to this continuous control loop that detects drift from the desired state and automatically corrects it.
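
The scheduling step above (assigning Pods to nodes based on resource availability) can be illustrated with a simplified filter-then-score sketch. The real Kubernetes scheduler is far more elaborate; the `Node` and `PodRequest` classes, the `schedule` function, and the "most CPU left" scoring rule are assumptions made for this example only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millis: int
    memory_mib: int


def schedule(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    """Pick a node for the pod, or return None if it must stay pending."""
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [n for n in nodes
                if n.free_cpu_millis >= pod.cpu_millis
                and n.free_memory_mib >= pod.memory_mib]
    if not feasible:
        return None
    # Score: prefer the node that would have the most CPU left afterwards.
    best = max(feasible, key=lambda n: n.free_cpu_millis - pod.cpu_millis)
    # Bind: reserve the resources so later decisions see the updated state.
    best.free_cpu_millis -= pod.cpu_millis
    best.free_memory_mib -= pod.memory_mib
    return best.name


nodes = [Node("node-a", 1000, 2048), Node("node-b", 2000, 1024)]
placements = [schedule(PodRequest(f"web-{i}", 800, 512), nodes) for i in range(4)]
```

In this run the first two Pods land on `node-b`, the third on `node-a`, and the fourth gets `None` because no node has 800 millicores free, which corresponds to a Pod staying pending until capacity is added.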

How Does Kubernetes Security Affect Container Orchestration Design?

Kubernetes security shapes container orchestration design because every decision about how you schedule, isolate, and expose workloads has to respect least privilege, segmentation, and compliance from day one.

Here are the key ways Kubernetes security affects container orchestration.

- Namespace and tenancy layout – You design namespaces per team, app, or environment to isolate workloads, then align deployments and services to that structure to reduce blast radius.

- RBAC and service accounts – You restrict who and what can change the cluster by assigning scoped roles and service accounts to CI/CD pipelines, operators, and apps, which govern how workloads are deployed and scaled.

- Pod hardening and policies – You enforce running as non-root, minimal Linux capabilities, and admission policies that block unsafe specs, which directly changes how Pod manifests and container images are built.

- Network and exposure controls – You apply NetworkPolicies, ingress rules, and strict service types so only approved traffic flows between Pods or out to the internet, which influences microservice boundaries and container entrypoints (the initial command that starts a container’s main process).

- Secrets and configuration – You rely on Secrets, external vaults, and ConfigMaps for credentials and config, so orchestration templates must be designed to inject sensitive data securely at runtime.
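
As an illustration of the pod hardening point, the sketch below checks a Pod spec, represented as a plain Python dictionary, against a few hardening rules. It is not a real admission controller: the `violations` function and the specific rules are hypothetical, it only inspects container-level settings, and the only Kubernetes names it relies on are the `securityContext` fields `runAsNonRoot`, `privileged`, and `capabilities`.

```python
def violations(pod_spec: dict) -> list[str]:
    """Return reasons a pod spec breaks the (illustrative) hardening rules."""
    problems = []
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext", {})
        # Rule 1: containers must declare that they run as a non-root user.
        if not ctx.get("runAsNonRoot", False):
            problems.append(f"{name}: must set runAsNonRoot: true")
        # Rule 2: privileged containers are rejected outright.
        if ctx.get("privileged", False):
            problems.append(f"{name}: privileged mode is not allowed")
        # Rule 3: no extra Linux capabilities may be added.
        added = ctx.get("capabilities", {}).get("add", [])
        if added:
            problems.append(f"{name}: added capabilities not allowed: {sorted(added)}")
    return problems


hardened = {"containers": [{
    "name": "api",
    "securityContext": {"runAsNonRoot": True, "capabilities": {"drop": ["ALL"]}},
}]}
risky = {"containers": [{
    "name": "debug",
    "securityContext": {"privileged": True, "capabilities": {"add": ["NET_ADMIN"]}},
}]}

hardened_result = violations(hardened)  # empty list: spec would be admitted
risky_result = violations(risky)        # three reasons to reject the spec
```

A policy engine built along these lines would reject any spec with a non-empty result, which is why security rules end up shaping how manifests and images are written in the first place.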

How Do Container Management Platforms and Cloud Services Support Orchestration?

Container management platforms and cloud services support container orchestration by running the hard parts of the platform, so teams can focus on applications, not plumbing.

The following points focus on how they support orchestration.

- Managed control planes – Cloud services provide managed Kubernetes and similar offerings, running the API server, scheduler, and controllers, so orchestration is delivered as a service, not something you operate yourself.

- Integrated networking and security – Platforms connect containers to cloud networking, load balancers, identity, and policy, so orchestration can enforce traffic routing, encryption, and access control using native cloud features.

- Image, observability, and policy tooling – Built-in container registries, logging, metrics, and policy-as-code tooling integrate with the orchestrator, making it easier to control images, monitor workloads, and enforce guardrails at cluster scale.

- Autoscaling and cost optimization – Orchestration ties into cloud autoscaling and billing, allowing platforms to adjust nodes and replicas automatically based on demand while keeping infrastructure spend under control.

FAQs

Q1. Is container orchestration necessary if I only run a few containers?

Ans: If you run fewer than 5–10 containers on a single host, you usually do not need full container orchestration; basic Docker commands or simple scripts are enough. Orchestration becomes necessary when you add multiple nodes, need high availability, or deploy updates frequently across environments.

Q2. Can I use containers in production without Kubernetes?

Ans: Yes. You can run production workloads using Docker on a single server or smaller tools like Docker Swarm. However, once you need cluster-level scaling, self-healing, and consistent rollouts across many services, most teams move to a full orchestration platform such as Kubernetes.

Q3. Does container orchestration only work in the cloud?

Ans: No. Container orchestration works on on-premises servers, private clouds, and public clouds. Many organizations use the same orchestrator (for example, Kubernetes) in a hybrid model, with some clusters on physical hardware and others on cloud providers.

Q4. Can stateful applications run under container orchestration?

Ans: Yes. Stateful apps run under container orchestration by using persistent volumes, storage classes, and patterns like StatefulSets in Kubernetes. The key requirement is to design explicit data durability, backups, and failover, instead of relying on the local disk.

Q5. How does container orchestration affect cloud costs?

Ans: Container orchestration can lower costs by bin packing workloads onto nodes and scaling down replicas when traffic drops. At the same time, misconfigured autoscaling, oversized resource requests, or idle clusters can increase spend, so you still need active capacity and cost management.
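
The effect of bin packing and oversized requests can be shown with a rough sketch. It uses first-fit decreasing, a standard bin-packing heuristic chosen here for illustration and not the algorithm any particular orchestrator uses; the `nodes_needed` function and all the CPU figures (in millicores) are made-up assumptions.

```python
def nodes_needed(cpu_requests: list[int], node_capacity: int) -> int:
    """First-fit decreasing: place each workload on the first node with room."""
    free = []  # remaining CPU (millicores) on each node opened so far
    for request in sorted(cpu_requests, reverse=True):
        if request > node_capacity:
            raise ValueError(f"request {request} exceeds node capacity")
        for i, remaining in enumerate(free):
            if remaining >= request:
                free[i] -= request
                break
        else:
            free.append(node_capacity - request)  # no node fits: open a new one
    return len(free)


# Seven workloads with requests sized to their actual usage:
right_sized = nodes_needed([500, 500, 250, 250, 1000, 750, 750], node_capacity=2000)
# The same seven workloads, each requesting 1500 millicores "to be safe":
oversized = nodes_needed([1500] * 7, node_capacity=2000)
```

With right-sized requests the seven workloads fit on 2 nodes; with inflated requests each workload occupies a node of its own, so the same applications need 7.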

Q6. What skills do teams need to operate container orchestration safely?

Ans: Teams need solid Linux fundamentals, networking basics, and a clear grasp of containerization concepts. For Kubernetes, they also need to understand YAML manifests, RBAC, namespaces, and monitoring and troubleshooting of clusters and workloads.

Q7. Is container orchestration suitable for regulated or security-sensitive environments?

Ans: Yes. Container orchestration is widely used in regulated industries as long as you enforce strong security controls: hardened base images, strict RBAC, network policies, audit logging, and integration with enterprise IAM and secrets management to meet compliance requirements.
